Cake day: Jun 12, 2023



https://github.com/noneabove1182/text-generation-webui-docker (updated to 1.3.1 and has a fix for GQA to run Llama 2 70B)

https://github.com/noneabove1182/lollms-webui-docker (v3.0.0)

https://github.com/noneabove1182/koboldcpp-docker (updated to 1.36)

All should include up-to-date instructions. If you find any issues, please ping me or open an issue right away so I can take a look :)


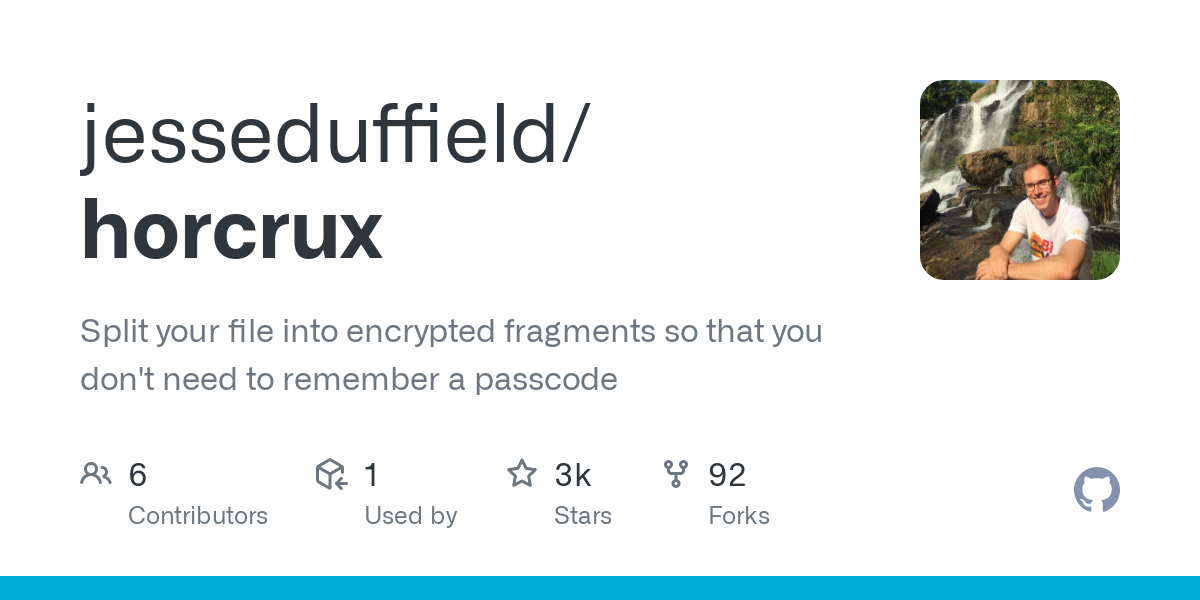

Saw this posted over here: https://sh.itjust.works/post/163355

sounds like a really fun concept that should be shared here too :D

Yes, that’s a good one for an FAQ because I get it a lot, and it’s a very good question haha. The reason I use it is image size: the base nvidia devel image is needed for a lot of the compilation during Python package installation, and it is huge. So instead I use conda, then transfer the result to the nvidia runtime image, which is… also pretty big, but it saves several GB of space, so it’s a worthwhile hack :)

But yes, avoiding CUDA messes on my bare machine is definitely my biggest motivation.
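
For anyone curious what the image-size trick could look like, here is a rough multi-stage sketch. It is not the actual Dockerfile from these repos: the builder base image (`continuumio/miniconda3`), the Python and CUDA versions, the `/opt/env` path, and the `requirements.txt` file are all assumptions picked for illustration.

```dockerfile
# Stage 1: build the Python environment with conda, so the large
# nvidia devel image is not needed for compilation.
# Assumption: continuumio/miniconda3 as the builder base.
FROM continuumio/miniconda3 AS builder

# A C/C++ compiler for packages that build native extensions.
RUN apt-get update && \
    apt-get install -y --no-install-recommends build-essential && \
    rm -rf /var/lib/apt/lists/*

# Assumption: Python and CUDA versions are placeholders.
# The conda cuda-toolkit package supplies nvcc inside the environment.
RUN conda create -y -p /opt/env python=3.10 && \
    conda install -y -p /opt/env -c "nvidia/label/cuda-11.8.0" cuda-toolkit
ENV PATH=/opt/env/bin:$PATH \
    CUDA_HOME=/opt/env

# Hypothetical requirements file; anything that compiles CUDA code builds here.
COPY requirements.txt /tmp/requirements.txt
RUN pip install --no-cache-dir -r /tmp/requirements.txt

# Stage 2: the smaller runtime image receives only the finished environment.
FROM nvidia/cuda:11.8.0-runtime-ubuntu22.04
COPY --from=builder /opt/env /opt/env
ENV PATH=/opt/env/bin:$PATH
```

The environment is copied to the same path it was built at, since conda environments generally do not like being moved to a different prefix. The copied environment still carries the conda CUDA toolkit, which is part of why the final image stays fairly large.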